An idea I had a while back that never really came to fruition. While working with Chinese-manufactured stator housing prototypes of varying quality, there were several instances where I wished for a simple, lightweight, portable and fast way to measure both flatness and alignment. Many moons later, the idea came back and also gave me a reason to fiddle with digital image processing (DIP) again.

Introduction

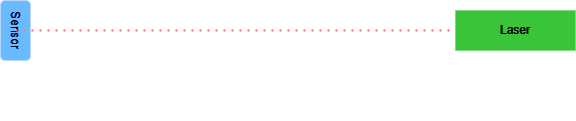

The basic idea is to take a webcam (or any digital camera with a CCD/CMOS sensor, for that matter) and remove the lens, exposing the sensor directly to incoming light. With no lens refracting the light, the sensor records a direct, undistorted picture of where the incoming photons land, which can then be used for potentially useful things!

By shining a bright, concentrated beam of light directly at the chip (wow, a laser!), it is possible to get a reading that tracks the position of the source incredibly accurately, down to µm accuracy in fact.

This concept in itself isn't entirely new, of course: laser beam profilers have been shining lasers at sensors for many moons (added bonus: you can use this for diffraction visualization too!), but I haven't seen it used in this scenario before. Possibly because it's a bad idea and I just haven't realized it yet.

Any modern sensor will have a resolution from VGA to FHD and beyond, while the CCD/CMOS die itself is usually no more than a few millimeters wide. For my current setup I'm using a dirt-cheap VGA (640×480) webcam with a 3mm wide chip, which gives me a per-pixel resolution of:

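$\frac{3\ \text{mm}}{640\ \text{px}} \approx 0.0047\ \text{mm/px}$

That is roughly 4.7µm per pixel, which is surprisingly good for such a cheap camera. An FHD (1920 px wide) chip of the same 3mm width would get you down to roughly 1.6µm per pixel(!)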
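The same arithmetic as a tiny helper, assuming (like the estimate above) that the whole 3mm die width is covered by the horizontal pixel count; in practice the pixel pitch listed in the sensor's datasheet is the number to trust. `pixel_pitch_um` is a hypothetical name:

```cpp
// Hypothetical helper: width of one pixel in micrometers, assuming the
// whole die width is covered by `pixels_across` pixels.
double pixel_pitch_um(double die_width_mm, int pixels_across)
{
    return die_width_mm * 1000.0 / pixels_across;   // mm -> µm, then per pixel
}
```

With these assumptions, `pixel_pitch_um(3.0, 640)` comes out at 4.6875µm and `pixel_pitch_um(3.0, 1920)` at 1.5625µm.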

New beginnings

I tested the first proof of concept back in mid-to-late 2020, but I never took it further than a simple webcam feed with basic image processing to denoise and smooth out features.

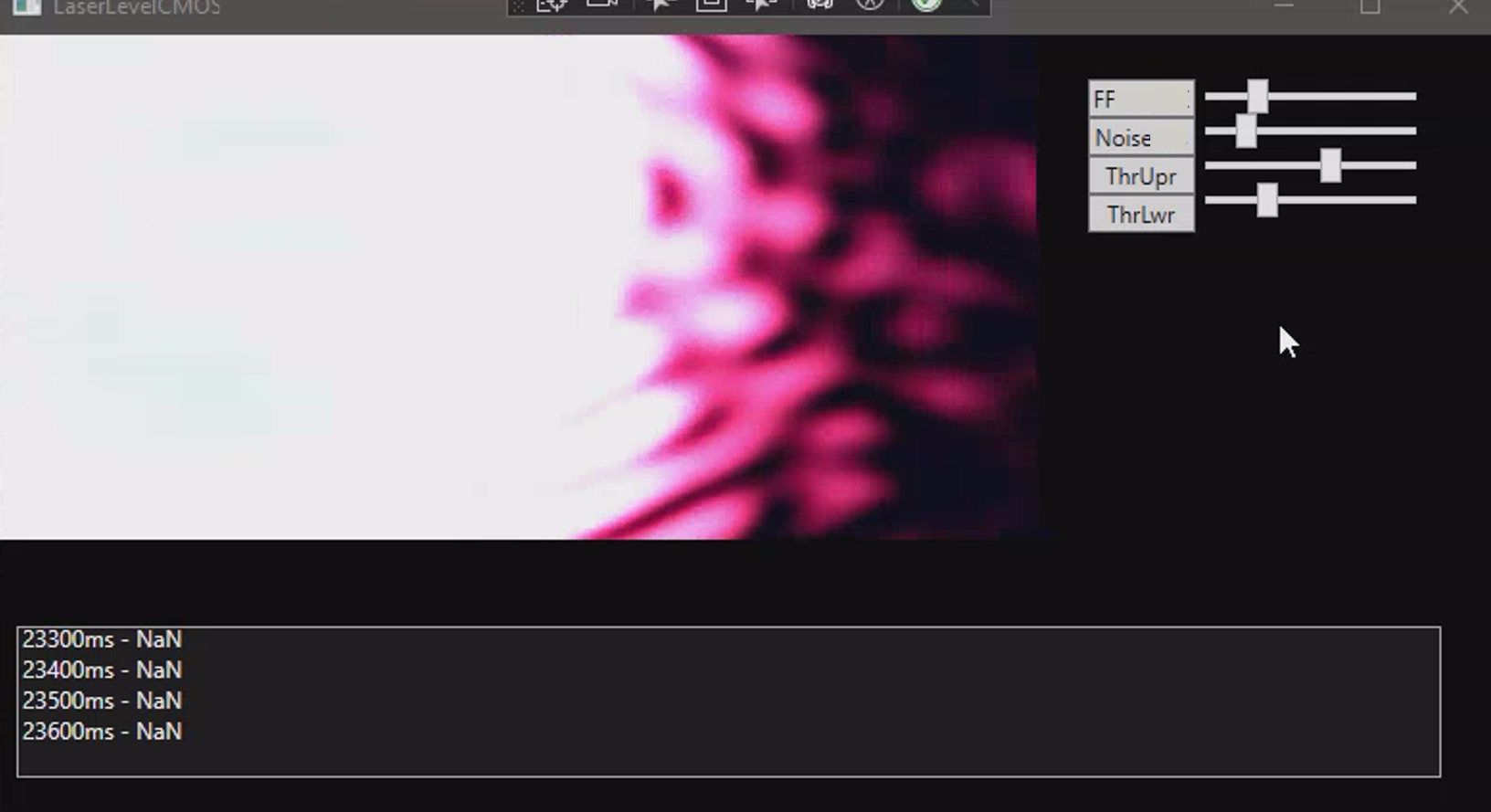

Recently I once again wanted to give this idea more thorough testing, and wrote a quick C#/WPF program to try things out.

Unfortunately, I found the existing (free) imagefeed/directmedia support for WPF to be severely lacking, bloated and clunky, as the diagnostics further showed. Not a huge issue for my testing purposes, but there is definitely a memory leak somewhere in the directmedialib I used to present the camera feed. This made me consider turning to Qt, until I heard that SDL3 was now being rolled out, which would be a dream to try out for this application. SDL2 had been on my mind before, but I found its features rather cumbersome the last time I tried using it.

New new beginnings

Having built and set up SDL3 for a Windows C++ project, I spent some time getting the basics up and running. I then integrated OpenCV to make it easier to work with and alter the image feed from the webcam.

The reason for choosing SDL over Qt was mainly "why not?", plus the chance to handle far more of the low-level control myself as a learning exercise. It also gives me a wider choice of GUI implementations and options, which is very helpful for both this and other projects.

OpenCV does come with its own GUI, aptly named HighGUI, which gives you easy access to high-level event handling and window creation, but it is really not suited for more involved UI work and customization.

This is where SDL comes in handy! Since it exposes most low-level features of the media layer, it is quite easy to access raw image data and pipe it through OpenCV for processing. One issue (as is always the case) is that OpenCV uses BGR ordering, whereas SDL (and seemingly every other framework on the planet) uses RGB.
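To make the ordering difference concrete: for packed 24-bit pixels the two layouts only differ in which color byte comes first, so converting between them is conceptually a swap of bytes 0 and 2 in every pixel. A minimal std-only sketch, assuming a 3-bytes-per-pixel buffer with a row pitch in bytes (`swap_red_blue` is a hypothetical name; in the actual program OpenCV's `cv::cvtColor` handles this):

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical helper: convert packed BGR24 <-> RGB24 in place.
// `pitch` is the number of bytes per row, which may include padding
// (like SDL's surface->pitch or OpenCV's Mat::step).
void swap_red_blue(std::vector<std::uint8_t>& buf, int width, int height, std::size_t pitch)
{
    for (int y = 0; y < height; ++y) {
        std::uint8_t* row = buf.data() + static_cast<std::size_t>(y) * pitch;
        for (int x = 0; x < width; ++x) {
            std::uint8_t* px = row + 3 * x;   // 3 bytes per pixel
            std::swap(px[0], px[2]);          // B,G,R -> R,G,B (and back again)
        }
    }
}
```

Row padding bytes beyond `3 * width` are left untouched, which is why the pitch is passed separately from the width.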

This was a relatively easy fix. I easily remembered how to convert from one color space to another, with no hiccups or brainfarts.

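```cpp
cv::Mat SDLsurface_to_mat(SDL_Surface* frame_data)
{
    int width = frame_data->w;
    int height = frame_data->h;
    int depth = frame_data->format->BitsPerPixel; // never used (and SDL2-style: in SDL3, format is an enum)

    //2 channels YUY2 format being weird
    cv::Mat mat(height, width, CV_8UC2); // 2 bytes per pixel...

    // ...but YUY2 packs two pixels into one 4-byte group (Y0 U Y1 V), so one Uint32 is not one pixel,
    // and indexing with frame_data->w instead of the byte pitch ignores any row padding
    Uint32* pixels = (Uint32*)frame_data->pixels;
    uint8_t* matData = mat.data;

    // convert data from Surface to Mat
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            Uint32 pixel = pixels[(y * frame_data->w) + x];
            Uint8 r, g, b;
            SDL_GetRGB(pixel, frame_data->format, &r, &g, &b); // expects a packed RGB pixel format, not YUY2
            // a stride of 4 bytes on a 2-byte-per-pixel Mat writes past the end of its buffer
            matData[(y * width + x) * 4 + 0] = b;
            matData[(y * width + x) * 4 + 1] = g;
            matData[(y * width + x) * 4 + 2] = r; //wtf?
        }
    }

    return mat;
}
```

Or you could RTFM and just use the built-in methods. Oops.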

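```cpp
// Wraps the SDL surface memory in a Mat header (no copy), then lets OpenCV convert YUY2 -> BGR.
cv::Mat SDLsurface_to_mat(SDL_Surface* frame_data)
{
    // current specific webcam has YUY2 format, cba writing checks for each potential format
    cv::Mat tempMat(frame_data->h, frame_data->w, CV_8UC2, frame_data->pixels, frame_data->pitch);

    cv::Mat bgr;
    try
    {
        // 2-channel YUY2 in, 3-channel BGR out; bgr gets its own buffer
        cv::cvtColor(tempMat, bgr, cv::COLOR_YUV2BGR_YUY2, 3);
    }
    catch (const std::exception& e)
    {
        SDL_Log("%s", e.what()); // don't pass arbitrary text as the format string
    }

    return bgr; // empty on failure, rather than a Mat still pointing into the SDL surface
}
```

After converting the surface's raw image data into a format OpenCV accepts, it was just a matter of converting the image data to grayscale, setting up a convolution kernel to traverse the data, and implementing simple thresholding for a preliminary test.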
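The program does these steps with OpenCV; the dependency-free sketch below only spells out what each step does to the pixels, under a few assumptions: the grayscale image is taken straight from the Y (luma) bytes of the YUY2 frame instead of going through BGR first (OpenCV offers the same shortcut as `cv::COLOR_YUV2GRAY_YUY2`), the convolution kernel is a plain 3×3 box blur, and the threshold value is up to the caller. All names are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

using Image = std::vector<std::uint8_t>;   // width * height bytes, row-major

// YUY2 packs two pixels into four bytes: Y0 U Y1 V.
// Keeping only the Y bytes gives a grayscale image.
Image yuy2_to_gray(const std::uint8_t* yuy2, int width, int height, std::size_t pitch)
{
    Image gray(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* row = yuy2 + static_cast<std::size_t>(y) * pitch;
        for (int x = 0; x < width; ++x)
            gray[static_cast<std::size_t>(y) * width + x] = row[2 * x];   // Y bytes sit at even offsets
    }
    return gray;
}

// 3x3 box blur: each output pixel is the rounded mean of its 3x3 neighborhood.
// Pixels outside the image are replaced by the nearest edge pixel (replicated border).
Image box_blur3(const Image& src, int width, int height)
{
    Image dst(src.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            int sum = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    int yy = std::min(std::max(y + dy, 0), height - 1);
                    int xx = std::min(std::max(x + dx, 0), width - 1);
                    sum += src[static_cast<std::size_t>(yy) * width + xx];
                }
            }
            dst[static_cast<std::size_t>(y) * width + x] = static_cast<std::uint8_t>((sum + 4) / 9);
        }
    }
    return dst;
}

// Binary threshold: 255 where the pixel is brighter than `thresh`, otherwise 0.
Image binary_threshold(const Image& src, std::uint8_t thresh)
{
    Image dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i)
        dst[i] = src[i] > thresh ? 255 : 0;
    return dst;
}
```

Chained as `binary_threshold(box_blur3(yuy2_to_gray(...), w, h), t)`, the blur spreads out single-pixel noise before the threshold decides which pixels belong to the laser spot.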

And voilà: after converting the processed image back to the RGB color space, in a format SDL accepts, we can display the now-blurred, recolored version of the grayscale image we are processing!

SDL_Surface* mat_to_SDLsurface(cv::Mat mat_data)

{

cvtColor(mat_data, mat_data, COLOR_BGR2RGB, 3);

SDL_Surface* surface = SDL_CreateSurfaceFrom(

mat_data.cols, //width

mat_data.rows, //height

SDL_PIXELFORMAT_RGB24,

(void*) mat_data.data,

mat_data.step

);

return surface;

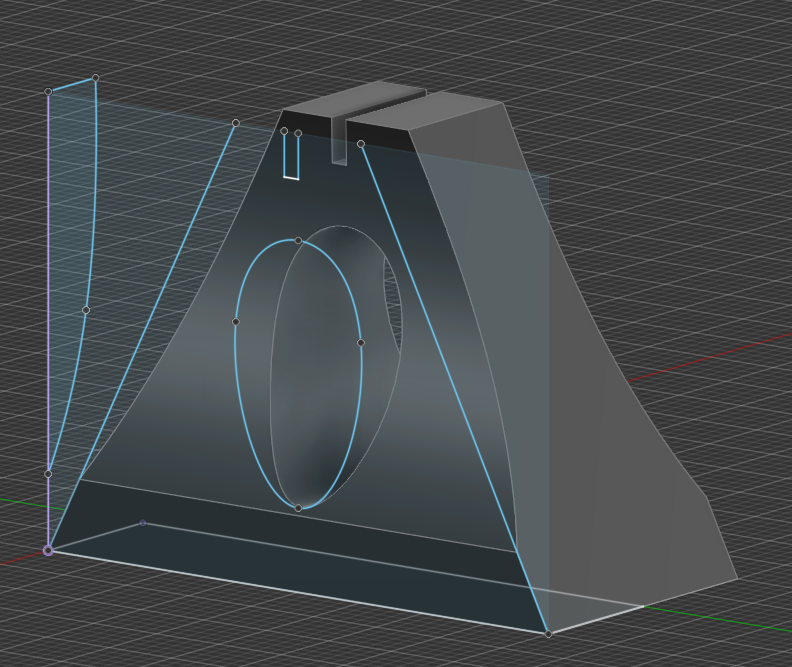

}The incredible diagram below shows the setup, currently using a clamped laser pointer as i don’t have a laser level at hand – very professional, I know. The distance from the emitter to the sensor is 100cm and resting on top of my table that measures 2.5cm in depth – rather sturdy.

The videos show clearly visible flex and distortion of the table under very light load: one finger pushing down barely harder than a keypress results in a significant readout. I 3D printed a (relatively) flat cradle that holds the sensor (relatively) perpendicular to the surface it sits on.

Obviously, doing this without machined parts or proper testing will introduce some error, since both the die surface and the emitter origin may be slightly tilted, skewed, etc., but it does show that the concept works (and very well at that!)
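To put a rough number on the tilt part of that error, consider a single idealized misalignment: the sensor leaning back by a small angle $\theta$ from perpendicular to the beam (real setups will also have offsets and skew). A displacement $d$ of the beam, perpendicular to its direction, then lands stretched along the tilted die surface, so the readout overstates it by a factor of $1/\cos\theta$, an error that is only second order in $\theta$:

$$
d_{\text{measured}} = \frac{d}{\cos\theta},
\qquad
\frac{d_{\text{measured}} - d}{d} = \frac{1}{\cos\theta} - 1 \approx \frac{\theta^2}{2}
$$

For a 2° lean ($\theta \approx 0.035$ rad), that amounts to overstating the displacement by only about 0.06%.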

One funny and annoying thing that happened during early testing was a very unsteady, shaky output. At first I thought I had done something wrong when blurring the image or during the conversion, shifting pixels off by one (or a few) in each frame or something similar.

Turns out the fan I had running on the floor behind me was causing minute vibrations to propagate through the floor into the table the sensor was on, slightly shaking it. The same happened whenever my neighbor was trampling around their apartment, which was a rather interesting revelation!

In the video above, a fixed point on the thresholded image moves 38px vertically. With a pixel pitch of 3mm / 640 ≈ 4.69µm, this means the table was distorted by roughly $38 \times 4.6875\,\mu\text{m} \approx 178\,\mu\text{m}$, or about 0.18mm(!) This assumes a perfectly perpendicular sensor-to-emitter setup, but even if it's slightly off, the result is far finer than what most common measuring tools can resolve.
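Picking a fixed point by eye works, but the spot's centroid is a more repeatable reference between frames. A sketch, assuming a row-major 8-bit image such as the thresholded mask (`Point`, `spot_centroid` and `displacement_um` are hypothetical names, and the pitch argument is the die width divided by the resolution as estimated above):

```cpp
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Point { double x, y; };

// Centroid of the spot, weighting each pixel by its value, so it works on a
// thresholded mask (all-or-nothing weights) or on a blurred grayscale frame.
// Returns false if the image is completely dark.
bool spot_centroid(const std::vector<std::uint8_t>& img, int width, int height, Point& out)
{
    double sum = 0.0, sx = 0.0, sy = 0.0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            double v = img[static_cast<std::size_t>(y) * width + x];
            sum += v;
            sx += v * x;
            sy += v * y;
        }
    }
    if (sum == 0.0)
        return false;
    out = Point{sx / sum, sy / sum};
    return true;
}

// Distance the spot moved between two frames, converted from pixels to micrometers.
double displacement_um(Point a, Point b, double pitch_um)
{
    return std::hypot(b.x - a.x, b.y - a.y) * pitch_um;
}
```

With a 4.6875µm pitch, a 38px vertical shift gives `displacement_um(Point{0, 0}, Point{0, 38}, 4.6875)`, i.e. about 178µm. Because the centroid is a weighted average it can also land between pixels, which is one possible route below the raw pixel pitch, though how much that helps depends on noise and vibration in the setup.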

Future endeavours

Seeing as the proof of concept worked extremely well, I will implement a UI and more functionality to fine-tune the results, as well as proper logging and visualization of the data.