Due to confidentiality restrictions, I cannot post the final document or the source code.

For our bachelor's project and thesis, our group of four (myself and three other members) was assigned to develop and document an Android application that autonomously photographs the inside of a fodder silo at set intervals and determines how much fodder remains. Personally, I would much rather have used a single-board computer (SBC) of some sort for this task, but I digress.

The core principle of the application is to let the user schedule one or more times a day at which it takes a photo and reports the fodder level. Since the project was meant as a proof of concept (PoC), most of the development and testing time went into gathering information and evaluating possible solutions, as this particular approach had not been used before.
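A minimal, platform-independent sketch of the scheduling step, assuming the user's choices are stored as a list of times of day. The helper `next_capture` is a hypothetical name: it returns the next moment a photo should be taken and wraps to the following day once all of today's slots have passed. On Android the result would typically be handed to a system scheduler such as `AlarmManager`; that wiring is omitted here.

```python
from datetime import datetime, time, timedelta


def next_capture(now: datetime, daily_times: list[time]) -> datetime:
    """Return the first scheduled capture strictly after `now` (hypothetical helper)."""
    if not daily_times:
        raise ValueError("at least one capture time is required")
    # Check today's slots in chronological order.
    for t in sorted(daily_times):
        candidate = datetime.combine(now.date(), t)
        if candidate > now:
            return candidate
    # All of today's slots have passed: wrap to the earliest slot tomorrow.
    return datetime.combine(now.date() + timedelta(days=1), min(daily_times))


schedule = [time(6, 0), time(18, 0)]
print(next_capture(datetime(2024, 3, 1, 12, 30), schedule))  # 2024-03-01 18:00:00
print(next_capture(datetime(2024, 3, 1, 19, 0), schedule))   # 2024-03-02 06:00:00
```

Times are naive local times here; a real device would also need to handle time-zone and daylight-saving changes.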

The main challenge with this approach is estimating the volume inside a container using a single camera. If the phone had a stereoscopic camera pair (as many newer phones do), it might be as simple as triangulating the distance to a point visible in both images, but since our test phone only had a single (monoscopic) camera, this was not an option.
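For reference, the standard relation for a rectified stereo pair shows why two cameras make depth easy: with focal length $f$ (in pixels), baseline $B$ between the two cameras and disparity $d$, the horizontal shift of the same point between the left and right images, the depth is

$$
Z = \frac{f\,B}{d}, \qquad d = x_{\text{left}} - x_{\text{right}}
$$

With a single camera there is no baseline $B$, so depth has to be inferred from something else, such as an object of known real-world size.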

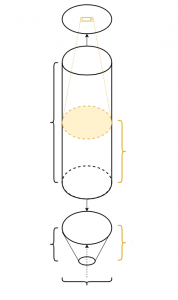

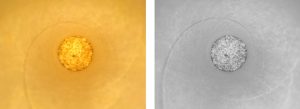

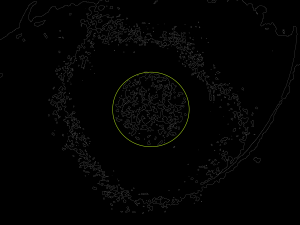

Using the silo's diameter, its height and the height of the cone at the bottom, all entered by the user, the application processes the image with OpenCV's built-in methods and isolates the visible circle of fodder, as shown in the images above.
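One way to turn the isolated circle into a pixel width, sketched under the assumption that the isolation step yields a binary mask in which nonzero pixels belong to the fodder surface: estimate the diameter from the mask area as an equivalent diameter, $d = 2\sqrt{A/\pi}$, which is less sensitive to ragged edges than the widest single row. The function name `circle_diameter_px` is hypothetical. If the circle is instead found with OpenCV's `cv2.HoughCircles`, which returns a centre and radius for each detected circle, the diameter is simply twice that radius.

```python
import math


def circle_diameter_px(mask: list[list[int]]) -> float:
    """Equivalent diameter (in pixels) of the fodder region in a binary mask.

    Assumes the mask contains only the fodder surface (nonzero = fodder).
    """
    area = sum(1 for row in mask for px in row if px)
    if area == 0:
        raise ValueError("mask contains no fodder pixels")
    # A disk of diameter d has area pi * d^2 / 4, so d = 2 * sqrt(area / pi).
    return 2.0 * math.sqrt(area / math.pi)


# Synthetic test: a filled disk of radius 50 px centred in a 121 x 121 image.
disk = [[1 if (x - 60) ** 2 + (y - 60) ** 2 <= 50 ** 2 else 0 for x in range(121)]
        for y in range(121)]
print(round(circle_diameter_px(disk)))
```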

Using the camera's properties (focal length and sensor size) and the width of the fodder circle in pixels relative to the total image height, one can calculate the distance from the lens to the fodder surface. Once this distance is known, calculating the volume of the remaining fodder, and thus the final level, is straightforward. As a PoC, we showed that the approach does work, but it needs considerably more work to become fully functional and reliable under all conditions.
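A minimal sketch of the distance and volume steps under a pinhole camera model (no lens distortion). It assumes the phone is mounted at the top of the silo, centred on its axis and pointing straight down, that "height" means the height of the cylindrical section, and that the cone narrows to a point at the bottom; both function names are hypothetical. An object of real width $W$ at distance $z$ covers a fraction $w_{px}/n_{px} = fW/(zs)$ of the image, where $f$ is the focal length and $s$ the sensor size along the same image axis as $n_{px}$. In the cylindrical section $W$ is the silo diameter; once the surface drops into the cone the visible circle shrinks with depth, which is where the cone height comes in.

```python
import math


def fodder_distance(w_px: float, n_px: int, focal_mm: float, sensor_mm: float,
                    diameter: float, cyl_height: float, cone_height: float) -> float:
    """Lens-to-fodder distance, in the same unit as the silo dimensions.

    n_px and sensor_mm must describe the same image axis (e.g. both the height).
    """
    k = w_px * sensor_mm / (focal_mm * n_px)  # = W / z, unitless
    z = diameter / k                          # valid while the surface is in the cylinder
    if z <= cyl_height:
        return z
    # In the cone the visible width is W(z) = D * (H - z) / h_c with
    # H = cyl_height + cone_height; solving W(z) = k * z for z gives:
    total = cyl_height + cone_height
    return diameter * total / (k * cone_height + diameter)


def fill_fraction(distance: float, diameter: float,
                  cyl_height: float, cone_height: float) -> float:
    """Fraction (0..1) of the silo volume occupied by fodder (assumes cone_height > 0)."""
    r = diameter / 2
    total = cyl_height + cone_height
    level = min(max(total - distance, 0.0), total)  # fodder height above the cone apex
    cone_full = math.pi * r ** 2 * cone_height / 3
    if level <= cone_height:
        # Partially filled cone: a smaller similar cone of height `level`.
        r_level = r * level / cone_height
        volume = math.pi * r_level ** 2 * level / 3
    else:
        # Full cone plus a cylindrical slice above it.
        volume = cone_full + math.pi * r ** 2 * (level - cone_height)
    return volume / (cone_full + math.pi * r ** 2 * cyl_height)


# Hypothetical numbers: 3 m wide silo, 6 m cylinder, 2 m cone, 4 mm lens,
# 3.6 mm sensor height, 3000 px image height, fodder circle 2500 px wide.
z = fodder_distance(2500, 3000, 4.0, 3.6, 3.0, 6.0, 2.0)
print(f"{z:.2f} m to fodder, {fill_fraction(z, 3.0, 6.0, 2.0):.0%} full")  # 4.00 m to fodder, 40% full
```

Trying the cylinder formula first and falling back to the cone formula is safe here because the two width expressions agree exactly at the cylinder/cone boundary.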